Today, we will talk about performing Seasonal Decomposition using Tableau 8.1's new R functionality. Seasonal Decomposition is the process by which you determine what patterns over time exist in your data. As usual, we will be using the Superstore Sales sample data set from Tableau.

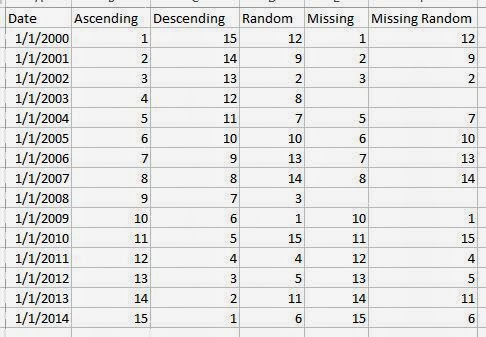

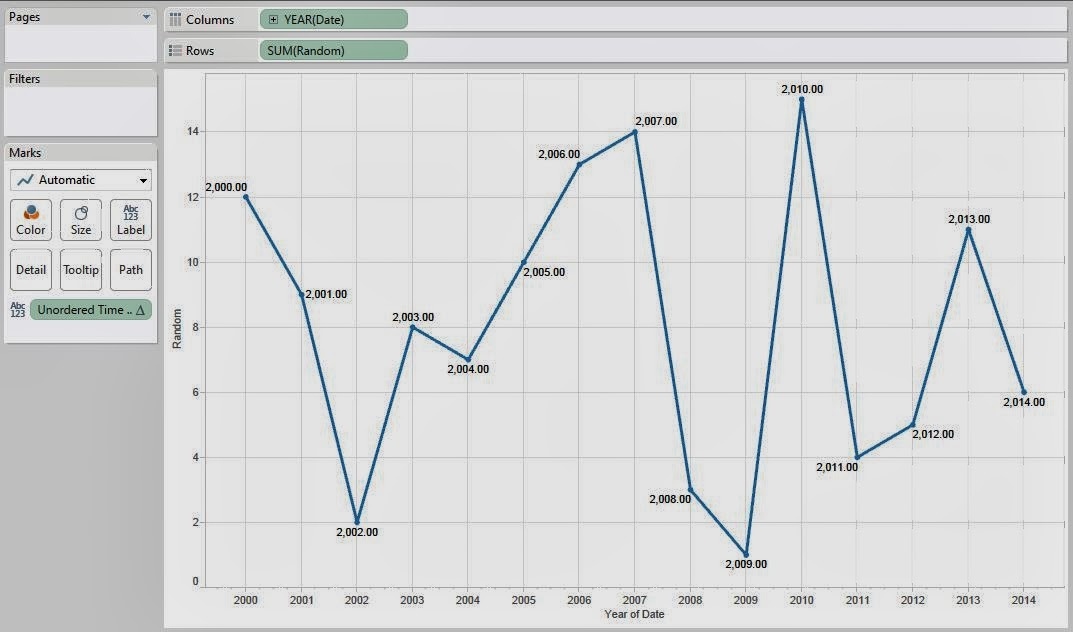

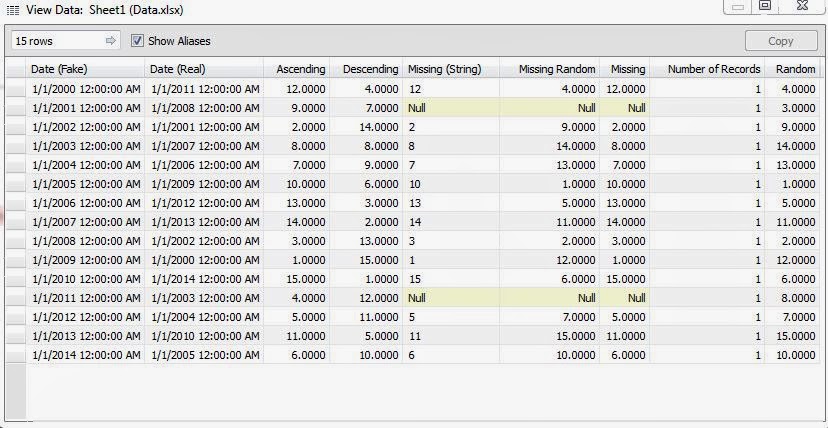

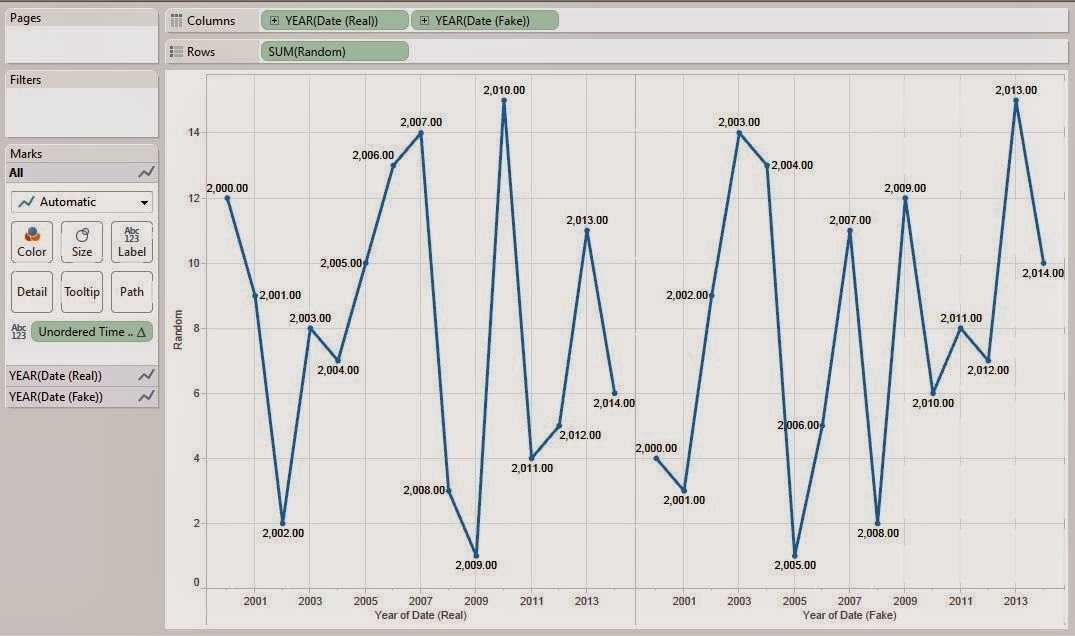

Let's start by looking at our data.

|

| Sales by Month |

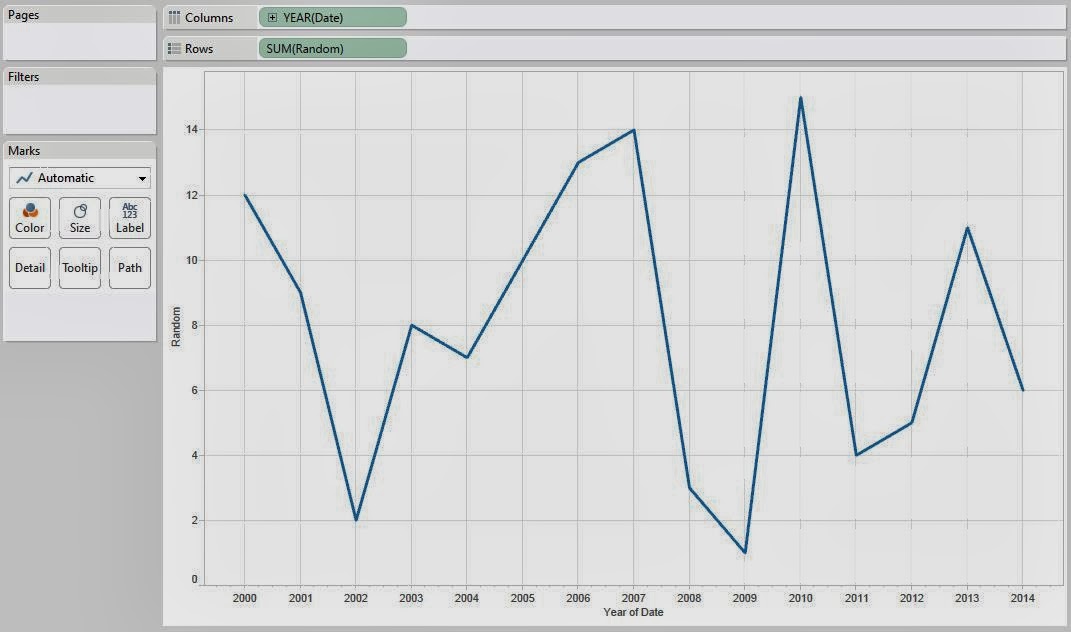

We can see that there is a definite "up-and-down" motion to the data. This motion is known as "seasonality". There also seems to be a very subtle downward trend to the data. However, all of this relies on our eyes. Let's use R to give us an actual decomposition. It's important to note that this technique isn't necessarily used for prediction. Therefore, the code is somewhat simplified because we won't be holding back observations. Here's the code for Sales (Trend):

SCRIPT_REAL("

library(forecast)

year <- .arg1

month <- .arg2

sales <- .arg3

len <- length(sales)

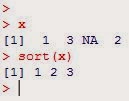

## Sorting the Data

date <- year + month / 12

dat <- cbind(year, month, sales)[sort(date, index.return = TRUE)$ix,]

## Decomposing the Time Series

timeser <- ts(dat[,3], start = c(dat[1,1], dat[1,2]), end = c(dat[len,1], dat[len,2]), frequency = 12)

as.vector(stl(timeser, s.window = 'period')[[1]][,2])

",

ATTR( YEAR( [Order Date] ) ), ATTR( MONTH( [Order Date] ) ), SUM( [Sales] ) )

Now, let's see what it looks like.

.JPG) |

| Sales by Month (with Tableau and R Trends) |

On this chart, we chose to add Tableau's "Trend Line" as well for comparison. What Tableau calls a "Trend Line" is actually a Regression line, which we saw quite a bit of in the first few posts of this series. R's seasonal decomposition trend is an entirely different type of trend. As you can see, the season decomposition trend adds much more information that the "Trend Line" does. For any time series model, you don't want to consider the very early observations because they are based off of only a few observations. So, let's filter out the year 2009 using a lookup (this way it doesn't affect our R calculations) so that we can really see what's going on.

+(without+2009).JPG) |

| Sales by Month (with Tableau and R Trends) (without 2009) |

Now, we can see that Tableau's "Trend Line" seems to imply that sales are increasing. However, the seasonal decomposition trend shows that it's more of a subtle wave than an increasing line. In cases of time series data, the Regression-based "Trend Line" that Tableau uses could lead to some faulty assessments. To be fair to Tableau, this "Trend Line" is perfect for scatterplots.

.JPG) |

| Sales by Quantity (with Trend) |

Now that we've seen the overall trend, let's look at the seasonality. As usual, the code is identical except for the last line. The rest of the code can be found in the appendix at the end of the post.

as.vector(stl(timeser, s.window = 'period')[[1]][,1])

Let's see what it looks like.

.JPG) |

| Sales by Month (with Seasonality) |

We see that the bottom chart repeats every year. So, we can use this to say that our sales peak in late autumn and winter (September - January). Strangely enough, our sales are average in November. Perhaps there's some driving factor to this. Maybe people are saving up money in November to buy Christmas presents in December. Alas, we digress. We can also see that sales are low in late spring and summer (April - August). This is hugely impactful information that you might not have been able to determine using other methods. On another note, imagine that we ran a huge sale in November 2011. How do we tell if the sale gave us higher than expected returns? Seasonal Decomposition can help here too. Here the last line of code for Sales (Remainder)

as.vector(stl(timeser, s.window = 'period')[[1]][,3])

.png) |

| Sales by Month (with Remainder) |

This chart shows us what was left once the Trend and Seasonality were removed, in other words, the remainder. So, we see that November 2011 had sales significantly higher than expected. Can this be explained by the sale we were running? It's certainly seems so. Of course, our analysis wouldn't end here if we were actually analyzing this. Alas, this is just a demonstration.

Finally, let's look at a way to predict new observations using seasonal decomposition. With seasonal decomposition, our predictions would always be equal to the trend plus the seasonal effect. For new observations, we will always have the seasonal effect because it repeats every year. However, we won't have the trend. There are plenty of different methods you could come up with to determine the trend. For this method, we'll just say that the trend for new observations is equal to the last trend we know (December 2012). So, once we add a few blank lines to our data set (which we've done before to create new observations), we can create our calculation. Here are the last few lines:

seasdec <- stl(timeser, s.window = 'period')

for(i in 1:len.new){if(dat.new[i,2] == month.orig[len.new + 1]){start <- i; break;}}

pred <- seasdec[[1]][start:(start + hold.orig[1] - 1),1] + seasdec[[1]][len.new,2]

c(rep(NA,len.new),pred)

Let's see what it looks like.

.JPG) |

| Sales by Month (With Forecast) |

We can see that the forecasting process seems to be working. It spikes in January and falls towards the middle of the year, which is what we expected. In fairness, we're convinced that the usefulness of this procedure is in the analysis using remainders and seasonality, not in the forecasting. Hopefully, this piqued your interest to give it a whirl. Thanks for reading. We hope you found this informative.

Brad Llewellyn

Data Analytics Consultant

Mariner, LLC

llewellyn.wb@gmail.com

https://www.linkedin.com/in/bradllewellyn

Appendix

Sales (Trend)

SCRIPT_REAL("

library(forecast)

year <- .arg1

month <- .arg2

sales <- .arg3

len <- length(sales)

## Sorting the Data

date <- year + month / 12

dat <- cbind(year, month, sales)[sort(date, index.return = TRUE)$ix,]

## Decomposing the Time Series

timeser <- ts(dat[,3], start = c(dat[1,1], dat[1,2]), end = c(dat[len,1], dat[len,2]), frequency = 12)

as.vector(stl(timeser, s.window = 'period')[[1]][,2])

",

ATTR( YEAR( [Order Date] ) ), ATTR( MONTH( [Order Date] ) ), SUM( [Sales] ) )

Sales (Seasonality)

SCRIPT_REAL("

library(forecast)

year <- .arg1

month <- .arg2

sales <- .arg3

len <- length(sales)

## Sorting the Data

date <- year + month / 12

dat <- cbind(year, month, sales)[sort(date, index.return = TRUE)$ix,]

## Decomposing the Time Series

timeser <- ts(dat[,3], start = c(dat[1,1], dat[1,2]), end = c(dat[len,1], dat[len,2]), frequency = 12)

as.vector(stl(timeser, s.window = 'period')[[1]][,1])

",

ATTR( YEAR( [Order Date] ) ), ATTR( MONTH( [Order Date] ) ), SUM( [Sales] ) )

Sales (Remainder)

SCRIPT_REAL("

library(forecast)

year <- .arg1

month <- .arg2

sales <- .arg3

len <- length(sales)

## Sorting the Data

date <- year + month / 12

dat <- cbind(year, month, sales)[sort(date, index.return = TRUE)$ix,]

## Decomposing the Time Series

timeser <- ts(dat[,3], start = c(dat[1,1], dat[1,2]), end = c(dat[len,1], dat[len,2]), frequency = 12)

as.vector(stl(timeser, s.window = 'period')[[1]][,3])

",

ATTR( YEAR( [Order Date] ) ), ATTR( MONTH( [Order Date] ) ), SUM( [Sales] ) )

Sales (Forecast)

SCRIPT_REAL("

library(forecast)

## Creating vectors

hold.orig <- .arg4

len.orig <- length( hold.orig )

len.new <- len.orig - hold.orig[1]

year.orig <- .arg1

month.orig <- .arg2

sales.orig <- .arg3

## Sorting the Data

date.orig <- year.orig + month.orig / 12

dat.orig <- cbind(year.orig, month.orig, sales.orig)[sort(date.orig, index.return = TRUE)$ix,]

dat.new <- dat.orig[1:len.new,]

## Decomposing the Time Series

timeser <- ts(dat.new[,3], start = c(dat.new[1,1], dat.new[1,2]), end = c(dat.new[len.new,1], dat.new[len.new,2]), frequency = 12)

seasdec <- stl(timeser, s.window = 'period')

## Predicting the new Observations

for(i in 1:len.new){if(dat.new[i,2] == month.orig[len.new + 1]){start <- i; break;}}

pred <- seasdec[[1]][start:(start + hold.orig[1] - 1),1] + seasdec[[1]][len.new,2]

c(rep(NA,len.new),pred)

",

ATTR( YEAR( [Order Date] ) ), ATTR( MONTH( [Order Date] ) ), SUM( [Sales] ), [Months to Forecast] )

.JPG)

.JPG)

.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Year.JPG)

.JPG)

+by+Y+ear.JPG)

.JPG)

.JPG)

.JPG)

..JPG)

+with+Labels.JPG)

.JPG)

.JPG)

.JPG)

+(without+2009).JPG)

.JPG)

.JPG)

.png)

.JPG)